by | | blog

In a previous post we discussed about the potential use of DBcloudbin in a reverse way: not for moving content to Cloud but to make Cloud content available to regular (potentially legacy) apps through its relational database. In this new post we will explain in greater detail the technique, with a sample app using Oracle.

The “nibdoulocbd” project consists of using our DBcloudbin solution in an reverse way, to provide our applications with access to documents that we already have stored in the Cloud, with minimal modifications in our application and in our data model. Let’s go with the details…

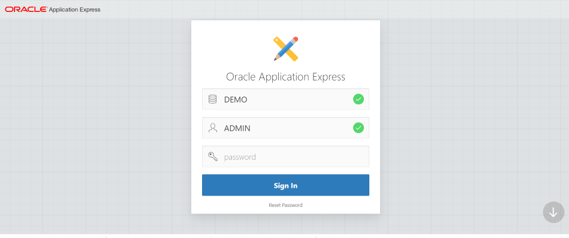

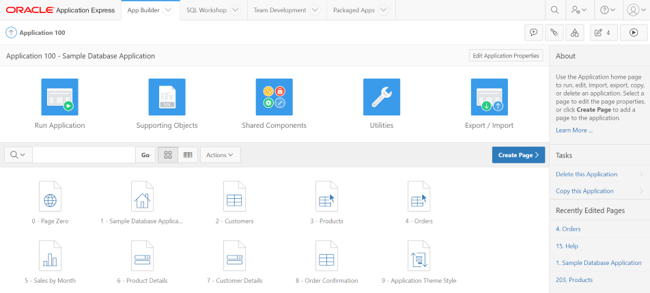

To carry out this “reverse re-engineering” process, we have selected one of the demo applications provided by the Oracle APEX development platform, but this process could be carried out on any of our applications running on Oracle or SQL Server. For simplicity, we configured an Oracle APEX environment on Docker; you can follow the following tutorial.

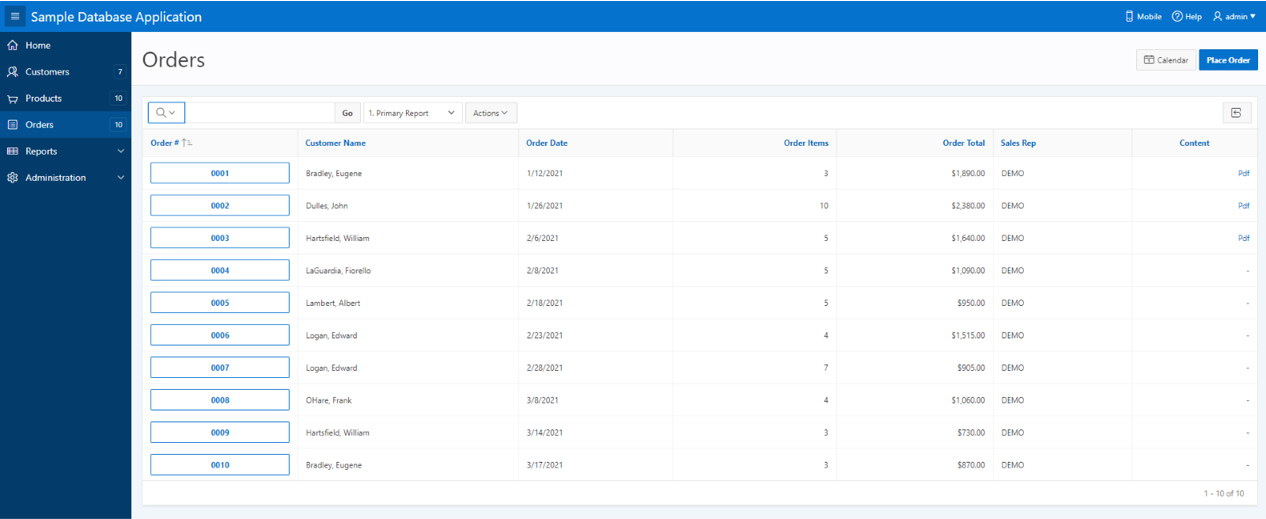

Once we have configured our environment with Oracle Apex 5.4 with a workspace called DEMO, it is time to get down to work. The first thing we will do is access our DEMO workspace through the APEX URL, in our case http://localhost:8080/apex/:

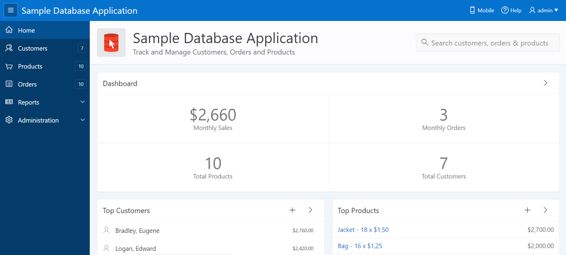

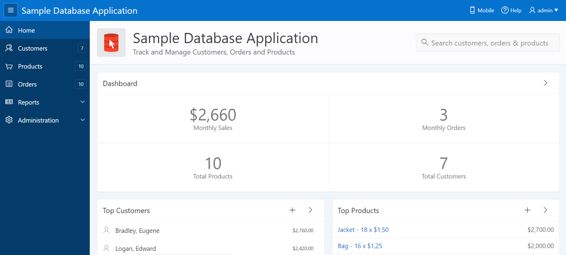

We see the application on which we will base this example “Sample Database Application” that is a sample business application able to manage sales orders (if it does not appear directly, you can install it from the Packaged Apps tab).

Data Model modification

APEX is obviously designed for easy handling of data through the database, but at this point we do not have an attribute (a BLOB type) in the database representing our external Cloud content, so we will have to modify our data model to treat binary data. We would like the user to be able to access the purchase orders in PDF format that we have stored in a S3 bucket in the Cloud from the “Orders” tab in our application; this way our application will handle in the same tab the ‘order data’ already processed with the pdf that we have received with actual order document. This seems a good feature to have.

For this, the only necessary modification in our data model will be to add the necessary fields to the DEMO_ORDERS table to achieve this purpose. This will be as simple as executing the following SQL statement from our preferred SQL client on the DEMO schema.

ALTER TABLE DEMO_ORDERS ADD ( CONTENT BLOB NULL );

Application Modification

NOTE: This is not required in a regular DBcloudbin implementation. In our “flipped over” sample scenario we need it because our application is not currently handling documents (binary content), so we need a minimal application enhancement.

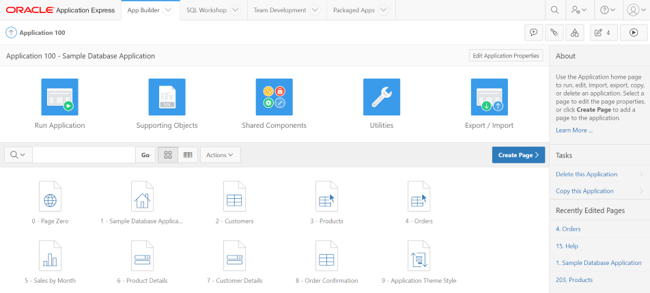

In this section, we will modify our Demo application so that it is able to access and show the user the data “stored” in the columns that we added to our model in the previous step. To do this, we access the APEX App Builder menu. After selecting the edition mode of our application “Sample Database Application”, the design window of our application will appear.

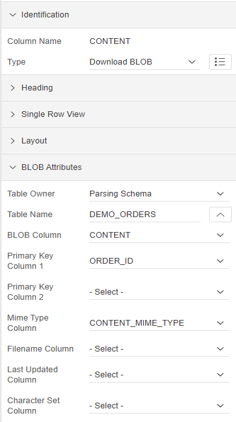

We select the page to modify, in this case our “Orders” page. Once inside the page designer, we will select the Content Body / Orders section, where we will modify the SQL query to show a new column on the page with the link to our PDF documents. We add the following line to the existing SQL statement, which will allow the application to have visibility of the new CONTENT column:

… o.tags tags, sys.dbms_lob.getlength(o.content) content from demo_orders o, …

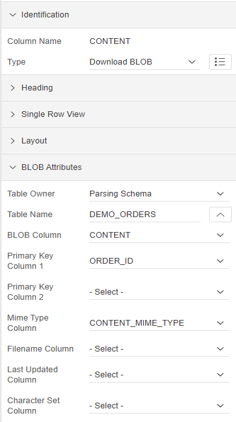

We save the modifications and the new CONTENT column will appear as part of the Content Body of the “Orders” page. When selecting this field in the properties screen we will see the following values:

With these simple steps our application will be ready to access documents stored in our database. But, as we commented previously, the idea was not to access the binary data directly in our database, but rather that what we wanted was to access our documents stored in the cloud, and thus avoid the loading in our database , occupying a huge and unnecessary amount of space in it. This is where DBcloudbin comes into the picture, after a quick and simple installation (you can find how to carry out said installation in the following link), and by selecting the DEMO_ORDERS table as the table managed by the solution, we can access the data of our storage in the preferred cloud (Amazon S3, Google Cloud, Azure,…).

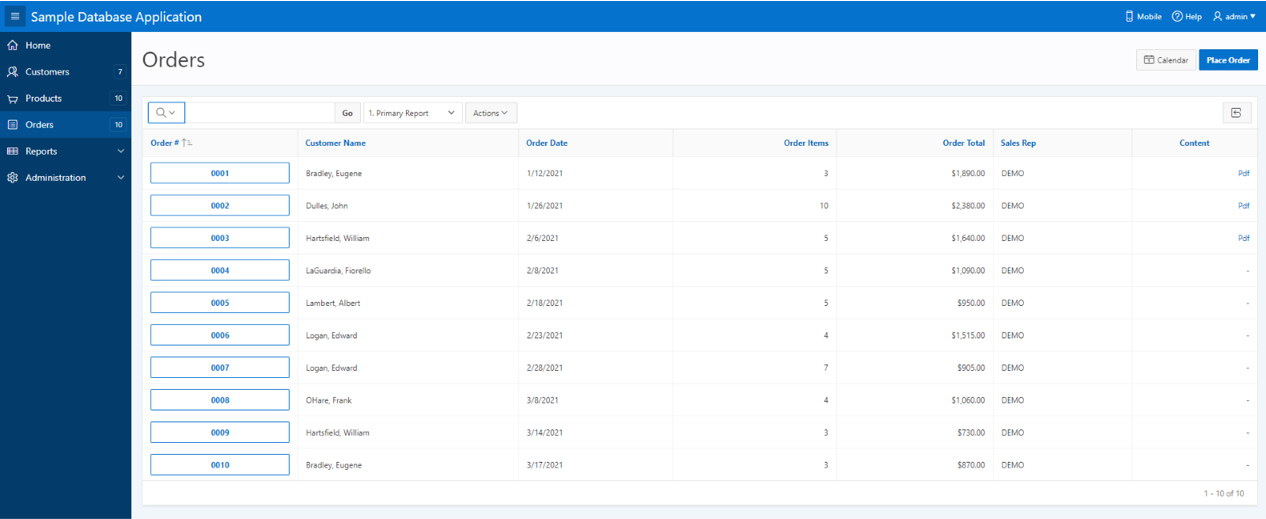

To finish this short tutorial and have access to the cloud data, we will have to perform two last actions:

The first, and as part of the DBcloudbin installation / configuration process, we must modify the configuration of our application so that it uses the new transparency layer generated by the DBcloudbin installer (previously it must have been made visible to APEX from its administration panel). To carry out this modification in our Demo application, we will access its edition menu, from the “App Builder” tab and select the option “Edit Application Properties”:

Where, within the “Security” tab, we can modify the scheme to be used by the application. Therefore, we will modify this scheme so that the application uses the transparency layer generated by DBcloudbin: DEMO_DBCLDBL. After this minimal configuration change, the application will be using the transparency layer generated by DBcloudbin to access the data, accessing it, in the same way as it did until now (without internal modifications of queries or other hassles).

As a last step, and since the movement of data to the cloud has NOT been managed by DBcloudbin, to have access to them, we must manually add the links to each of the PDF documents we want to access. To do this, we must insert these links in the DEMO_ORDERS_DBCLDBL table, belonging to the DBcloudbin transparency layer. In our particular case and assuming that the name of the PDF documents stored in the cloud is the purchase order identifier, we can make a simple insertion with an SQL statement similar to the following:

INSERT INTO DEMO_ORDERS_DBCLDBL SELECT order_id, ‘/<bucket_path>/’||order_id||’.pdf’ from DEMO_ORDERS;

Where the <bucket_path> is the path in our S3 bucket where the documents are located. Once we have all the links inserted in the transparency layer, the documents will be perfectly accessible from our application as if they were contained within the same database:

Hope you find interesting this alternative usage of DBcloudbin solution.

by | | blog

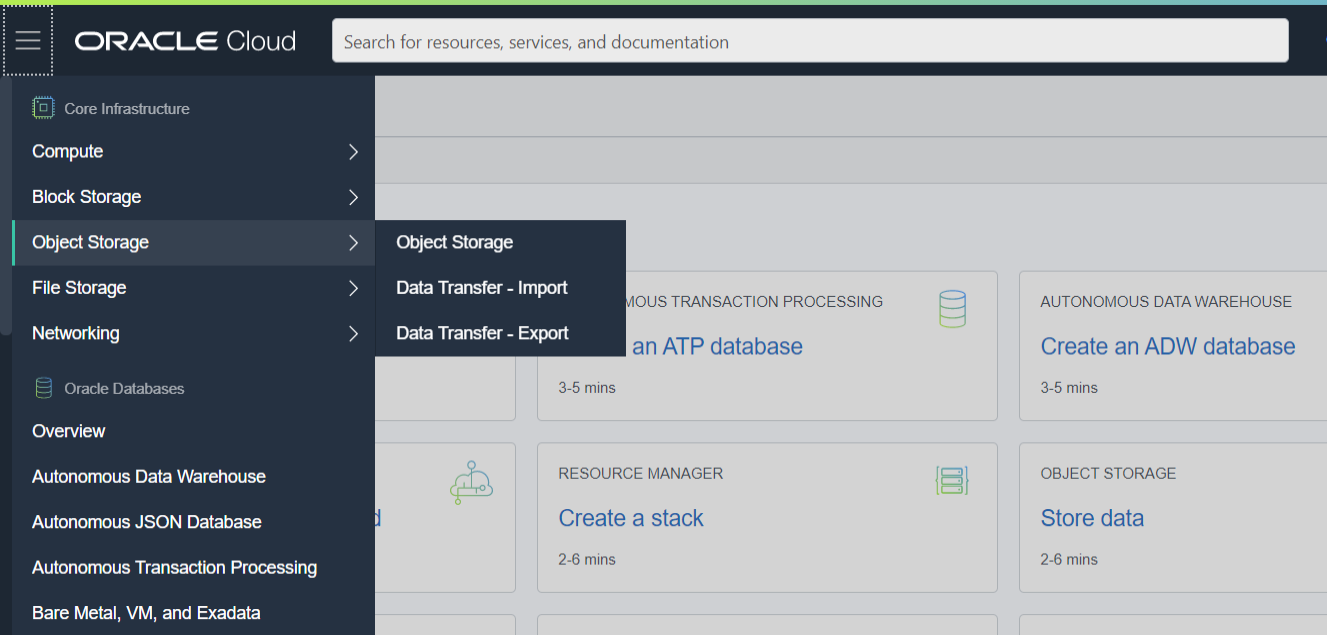

Oracle is pushing hard and committed with its Cloud offering, Oracle Cloud Infrastructure (OCI). It is obviously very optimized for the Oracle product set deployment, specifically databases, including the new and flagship Oracle Autonomous DB version, but it has a full portfolio of the common cloud infrastructure services, including an object storage offering.

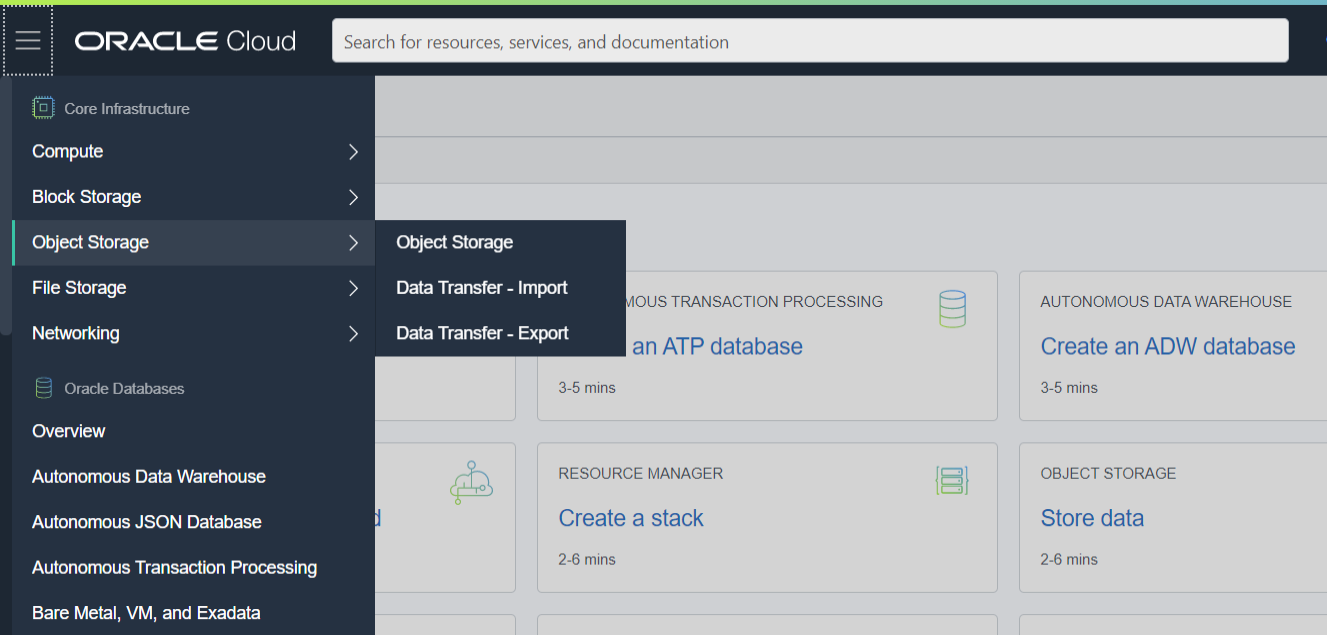

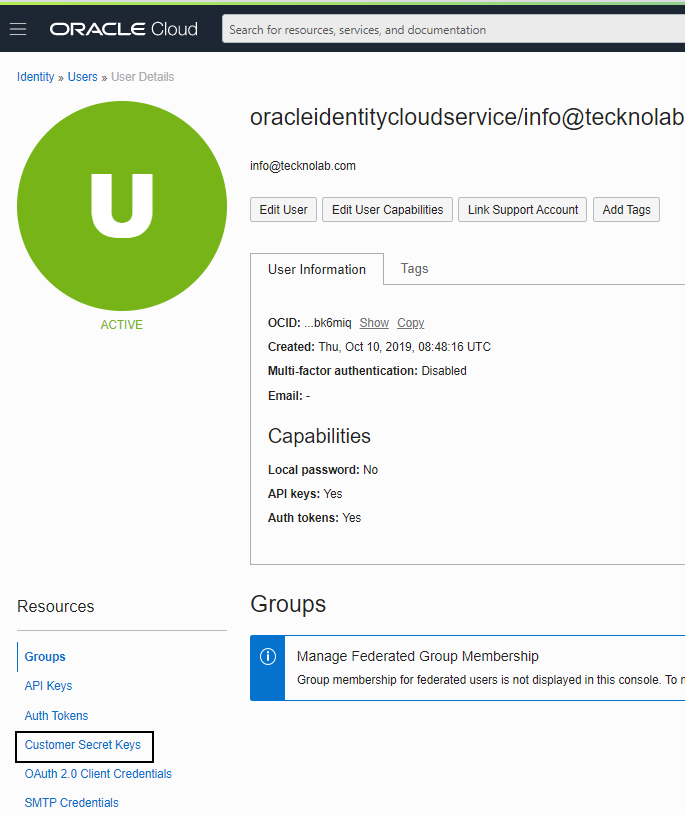

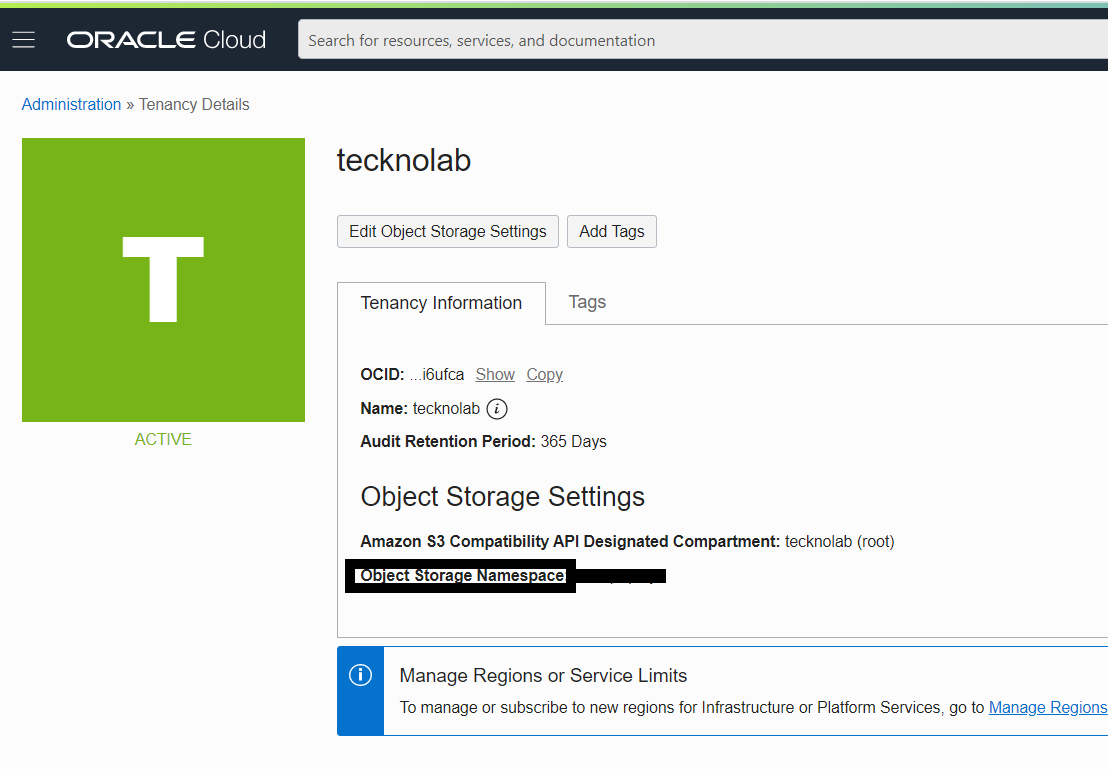

If you are going to use Oracle Cloud Infrastructure (OCI) object storage as repository for DBcloudbin content, please check the general setup instructions or the installation manual for detailed product setup instructions for a general scenario. In addition we will provide here preparation instructions specific to Oracle Cloud. As of today, DBcloudbin does not support the OCI object store proprietary API protocol so we are going to discuss on how to configure the solution for connecting through the S3 compatibility layer.

We assume you have a basic understanding on OCI terminology and components. A good summary can be found here.

In a nutshell, you need the following:

- Go to Oracle Cloud portal / Core Infrastructure / Object Storage and provision a new bucket (by default in the core compartment). Default settings, are fine (private bucket, versioning disabled, encrypt with Oracle-managed keys).

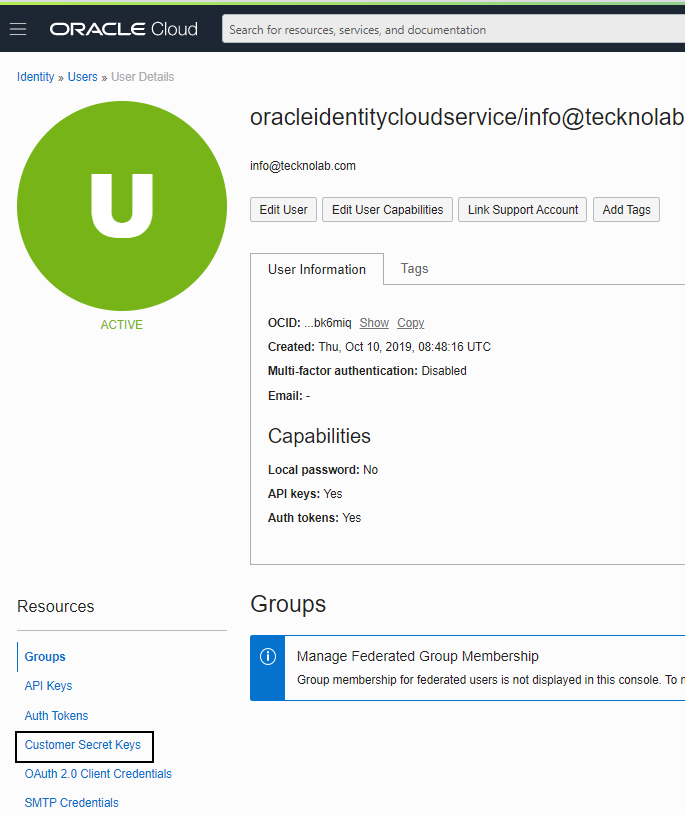

- S3-compatible credentials are required. So, go to user profile in your Oracle Cloud portal (upper right-hand corner) / User settings and select “Customer secret keys”. Create a new key and take note of the Access Key and Secret Key (they are long alphanumeric strings). For security, you may want to do this using a specific user with the strict required capabilities for accessing that new bucket.

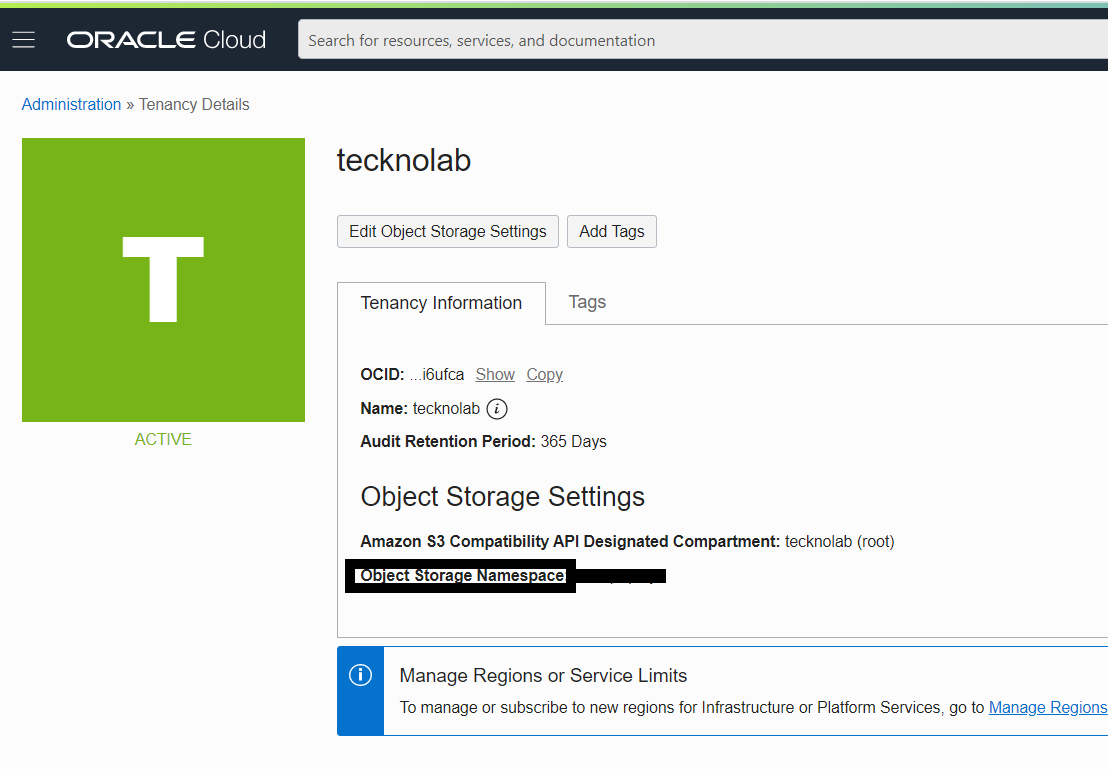

- Check the S3 compatible endpoint address (protocol is https). The endpoint address is in the form <object-store-namespace>.compat.objectstorage.<region>.oraclecloud.com where:

- object-store-namespace is an alphanumeric string that can be found in User profile / Tenancy details.

- region is the OCI region identifier where the object store is provisioned. You can find the region description at the top of your web console. Then, you can go here to find the region id that corresponds to that description.

With all this info collected you are ready for executing the DBcloudbin setup wizard and provide the collected information in the screen where a “S3 Compatible” object store is requested. You will need a DBcloudbin license with S3-compatible service class (by default, the trial one is not; in that case, contact us and request it). The setup will check that it is able to read/write/delete content from the bucket; if any error is found, please write down the error produced and contact support.

In v3.03, we have added support for Autonomous DB in addition to all the regular Oracle DB versions, so you can use our solution with the state-of-the-art, fully OCI based stack, where your application, your database, your DBcloudbin agent and your object store is fully hosted in OCI.

Have fun!.

by | | blog

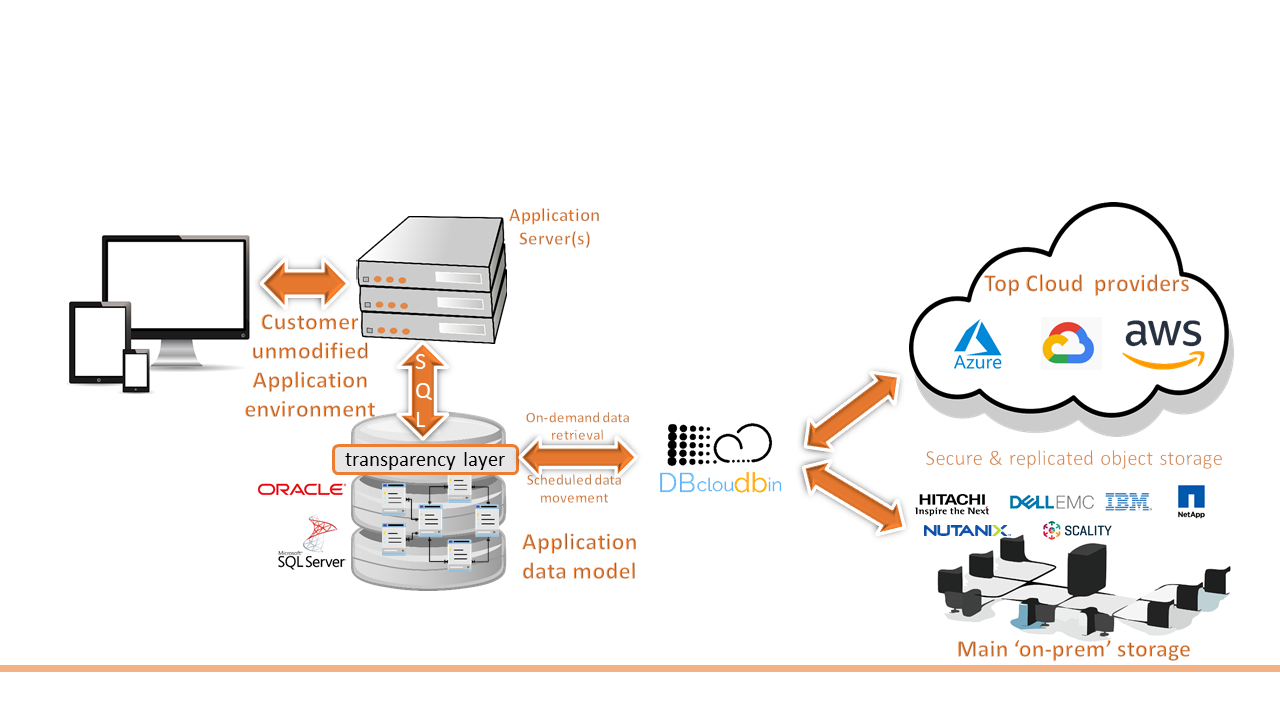

In this post we want to provide the essential vision on the fundamental question: ” Why DBcloudbin?.” You can read it or just take a look on our video where we describe it animated (or both!).

Any business is generating more and more data every day. Being able to deal with it efficiently and at scale is not only important but crucial. We must do it or our competitor will do it and take us out of business.

IT landscape has robust and scalable database technology that has been created decades ago and is used by our line-of-business applications for storing our precious content: business information. Our apps fuel all the company processes and should be dynamic, efficient and fast. Structured data has been always key, but unstructured data and our capabilities for managing it efficiently will make the difference for the ‘new era’ enterprises. This is the cornerstone of new IT, adding value to the business by managing, processing and adding incremental value to ‘dark‘ data, that information our company has but is unable to leverage it.

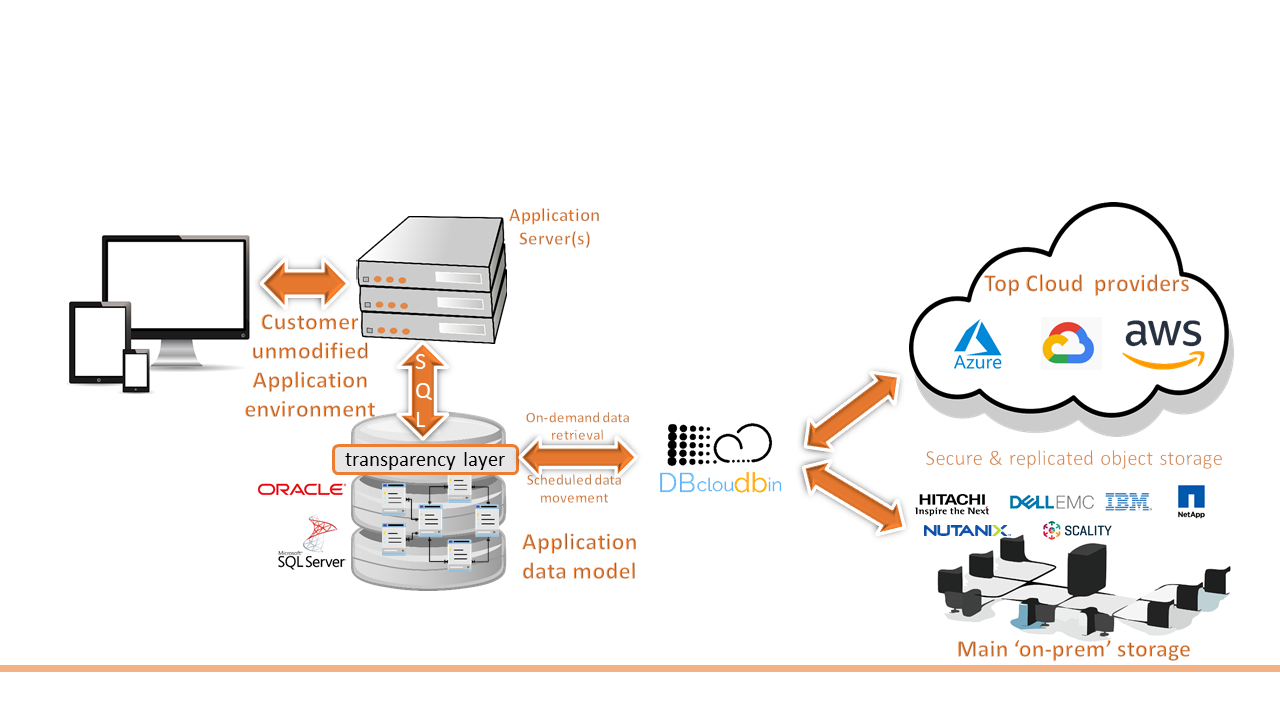

We see databases as the key aggregator and consistent repository that fuel our applications, but not necessarily a one-size-fits-all approach for storing all our content is efficient. Layering data at the right tier while maintaining common access, common security and common reference is a better approach in many cases. We need to store and process every piece of data where it is most efficient and secure, while we maintain a unified view, a consistent catalog.

Traditionally, our applications drive and decide how our data is modelled and stored; even how it is consumed. We believe in unlocking data from applications while maintaining consistency. Data must be opened to other alternative business applications for maximizing value. Cost efficiency and incremental value generation are possible. Data processes transformation without long expensive re-engineering is doable.

That’s why we created DBcloudbin and this is what is driving our roadmap and future innovation. Here you can check the details of how it works today.

by | | blog

DBcloudbin 3.03 added some cool functionalities to our solution. If you need a basic understanding on what is DBcloudbin new update, you have a solution overview section that cover the fundamentals and a DBcloudbin for dummies post series that goes one step back using less technical language. For the “why DBcloudbin?” you may want to review our DBcloudbin vision post.

In version 3.03 we are introducing some important features for the solution:

- Built-in job workers parallelization: With version 3.03.x, when creating a new job for archiving, restoring or purging your DB contents, you can include a -numworkers <n> option with the number or workers thread parallelization requested for the job. This, will parallelize the operation at the DBcloudbin agent and with the additional re-engineering performed in the job execution will provide a significant performance improvement. We have measured gains of 30% due to the optimized data locking strategy in parallelization. Now, affected table row is locked by a single thread that passes the actual execution to a pool of workers. This reduce contention at database, improving the throughput.

- Audit jobs: DBcloudbin is designed for moving content from the database to an external object store. In order to maintain access from the application to the externalized content, an internal link to the object is safely stored at the database. We may need at some point in time to check that this link of catalog remains consistent (it is pointing to real objects at the object store). Even more important, if we are leveraging the DBcloudbin heterogeneous replication (where we can define a primary and replica object store, even using different providers and object store technologies) we may want to fix any missing link (this may happen for example, if we add the replica object store when we already have archived content in the primary one, or when we need to swap the existing object store by a new one, potentially from a different vendor). In all this scenarios, audit jobs are the most effective and efficient solution. You can just execute and audit to report any potential inconsistency or add the -fixprimary and/or -fixreplica options to fix unidirectionally or bidirectionally the missing replicas.

- Improved monitoring: DBcloudbin provides a useful cloud-based operational dashboard (in customer area, “Activity Dashboard” tab) where any customer can review the activity, metrics and potential issues of their database managed instances (those where DBcloudbin has been configured). These dashboards are continuosly improved and in v.3.03 we have added activity events that adds interesting indicators (as the customer application archived content reads). However, some customers due to their own policies prefer to build their on-premises, customized operational dashboard. In order to enable this, we have added a logger in our log4j logging engine, called “ActivityLog“, where all the events that are used for populating the centralized dashboard can be captured and directed to any custom log sink (as for example a syslog destination) an processed for generating an on-premises, custom operational dashboard (e.g. using any popular framework as ELK from Elastic). Keep tuned for a specific blog post where we can show a sample on how to generate such a custom dashboard.

- Support of cloud based DB offerings. DBcloudbin can be used with on-premises or cloud based DB implementations. However, some specific cloud offerings come with restrictions on how the privileged users and permissions are managed or exposed to the customer. We have re-engineered our solution to reduce as much as possible the privileged user requirements on implementation (the product did not already need any privileged user for normal operation, only during setup). Starting in v.3.03 version, we support Oracle autonomous DB in Oracle Cloud, Oracle RDS in AWS and Azure based SQL Server Managed Instances (MI), enhancing our customer choices for deploying the solution.

- Java 11 support: DBcloudbin now officially support Java 11. We have managed to overcome the restrictions due to some 3rd party libraries in our setup tool and now there is no restriction to use Java 11 for installing and running the solution.

There are some other minor features that can be checked in our release notes but these are the most relevant.

by | | blog

For a few years now, everyone has been talking about migrating our data and applications to the cloud, and it is something normal, I would say even natural. Once the stigma of security has been saved, and the skepticism on some companies to remove their data or applications from those bunkers called datacenters, the reality is that the benefits offered by the cloud are difficult to overcome in a local environment. Application re-engineering may be required, however (and this is where DBcloudbin can help, as we will see later).

Cost savings is one of the main benefits, savings in physical infrastructure (servers, network elements, …), savings in management and maintenance of the same infrastructure and savings at the software level (for example, avoiding the acquisition of licenses), are some of the most attractive benefits of moving to the cloud. But its benefits are not exclusively monetary, our applications, data, servers, etc., will also benefit from a complex set of mechanisms and flows that will make them more scalable, secure and with high availability.

From Tecknolab, and through our DBcloudbin solution, we help our customers to take this step, migrating in a simple, secure and transparent way binary data (documents, images, …) from their databases to the main Cloud providers. Reducing your databases and therefore the cost of infrastructure, management and protection of them.

But what if, for example, we already have data in the cloud in an S3 repository or similar and we want to exploit it from an application outside of it?. The obvious and simple answer would be: make the necessary modifications in your application, to be able to interact with the cloud storage systems, using the protocols and APIs that the same providers provide (Amazon S3, Google Cloud, Azure…). Many times this process of reengineering the data access layer of an application is not easy at all, even sometimes the complexity of the application itself, as in legacy applications, makes it unfeasible. In addition, this type of process usually entails certain costs that are difficult to bear for a project of these characteristics.

Therefore, discarding this point, and based on the principle of simplicity, what is likely is that said application already uses a database, and that, if the application already supports binary data processing, its data is capable of supporting such functionality. This is where the nibdoulocbd (DBcloudbin flipped over) project comes in.

The nibdoulocbd project

The nibdoulocbd project is based on a “reverse engineering” of the cloud data, after which and using DBcloudbin as a solution, we will be able to make our application able to have visibility of this data, without a custom cloud integration; maybe with slight modifications in case it is not prepared for the treatment of binary data (which will always be less expensive than having to fully implement an S3 connector, for example). This process will not move the data from the cloud (a priori it is not what we are interested in, although we could even do it through DBcloudbin if necessary), but it will make it accessible from our application in a transparent way.

An example from the real world.

Let’s imagine that we have in an S3 bucket a series of sales order documents in PDF format, stored with the identifier of the order to which they belong and that we want to be accessible as an attachment to that order from our application, that to this day, it does not handle binary data in our database. Well, after installing DBcloudbin in our system, adding small modifications for the handling of a binary field from our application and by inserting in our database the “links” to the document in S3 linked to each sales order, our application will be able to access said data transparently and without altering the size of our database.

In addition, once DBcloudbin is installed in our systems, it will not only allow us to access existing data in the cloud, but it will also provide us with the tools of the solution working in “non-inverse” mode, allowing us to archive or restore data to and from the cloud that are stored in the database of our application.

In a future post, we will provide a hands-on implementation description of this example. Meanwhile, for more details of the solution, visit https://www.dbcloudbin.com/solution

by | | blog-dummies

In this blog series we’ll try to explain DBcloudbin for our non-technical audience.

We believe it is a great product and we want to open it and allow a reasonable understanding for those that are not familiar with the data management and database concepts, helping with this blog. In this first post, we will deal with the basics, structured in 5 topics. In the next one, we will introduce the basic problem of many applications that DBcloudbin comes to solve. Let’s start…

1.- How a typical enterprise application works.

For those used to work with applications running in their phone or laptop, enterprise applications are substantially different in most cases. They usually run in a centralized infrastructure where an application server is executing the application intelligence and the users are connecting to it remotely, in many cases through an web interface (so accessing a ‘well-known’ web page). You enter the URL, log in with your credentials and start using it for your daily duties. This application infrastructure behind the scenes can be just one computer with all installed in, or dozens of servers with different roles and external storage infrastructure and communications networks for providing a complex IT service.

2.- How data is stored for an application working properly.

Any non-trivial application deals with data and is the result of taking some data as input, executing a defined process with it, and generating some output data. This data has to be stored somewhere. There are multiple options but the most common situation is having a database, that is in fact an subsidiary application that provides this capability.The database is able to provide some very interesting services on top of safely storing data as is providing a way to structure and query that information, validate its formal representation (e.g. make sure that a customer record is only stored if it contains an attribute with “First Name” and “Last Name”), handle the simultaneous access of several applications to the same data in a unambiguous way, among many others.

The most used database type is what we call relational database where data is structured in tables as in an Excel spreadsheet. Databases provide a way for applications to read and write data; since those operations need high flexibility it is done providing a formal language; this way, an application can ‘talk’ to the database an tell exactly what it needs.

The most used language is called SQL. In computers, the language normally has more strict rules than in human language, both syntactically and semantically. If an application request the “contracts closed on last Monday” our application should have beforehand instructed our database that there is something called “contract”, they have an attribute that can hold the value “closed” and other attribute that indicates when the operation was performed; otherwise, the application receives an error.

3.- How is data protected in a database.

Any database software (remember a database is just another application) will physically store the information in media based on their own proprietary criteria. Nobody else than the database software needs to know it. However, we need a way to store a copy of that data anywhere else to be able to restore the database content in the case of any disaster. This will require the integration of the database software with another software (provided by the database manufacturer or not) able to extract a copy of that data for protection in an independent media (this is called backup software). When the database is large, this process takes time. Restoring the database in the case of problems, will take also significant time, with the additional trouble that in that case we will not being able to have our application running (so, no service provided to our users).

4.- What are the types of storage.

Keeping it simple, we have three basic types of storage from an access perspective (from a physical perspective there are other classifications but it is less relevant for our purpose):

- Block storage: This is the older and most common type. In this case a repository of data is somehow assigned to a computer and the operating system of this computer will create the physical structure to handle raw data in this repository (or ‘disk’). This is what happens with the disk that our laptop has installed for operating and storing our documents. In large enterprise environments those disks (or many of them) are not physically inserted in a computer, but assigned to a computer from a centralized pool of storage accessed through a special storage network. This is the most common way of serving storage to the server where the database application is running; so the database application consumes this type of storage for saving the data that its client applications ask to be persisted.

- Networked File storage: In this case, the storage tier provides an additional level of service and is able to provide a filesystem, so a higher level way of structuring our files in folders, that can be accessed from several servers. It is commonly used for providing a file storage service to users where they can save their documents and other stuff. It uses protocols with similar primitives but differences for Windows and Linux systems that generate some interoperability challenges.

- Object storage: Is the newest type of storage where we can store content (objects) in a common namespace where objects are identified by its name. It is based on a significantly different approach in the sense that is not the operating system of the server that deals with the storage, but the applications running on top of the operating system, dialoguing directly with the storage gear using a defined protocol. The most common and becoming a de-facto standard is the S3 protocol, implemented by the S3 service (Simple-Storage-Service) provided by Amazon Web Services. Now, there are many different storage manufacturers providing a similar service, most of them implementing the same protocol for interoperability.

5.- How is all this related with the Cloud.

Cloud computing is a way to consume computing, storage and networking services without having to deal with the actual infrastructure. In general, the Cloud provider can deliver IT services at a many levels of abstraction (pure infrastructure, application platforms, end-user ready to consume software, …). Moving to the Cloud is in general a complex task for non-trivial workloads when we need to provide a business continuity to our IT services as it is the normal scenario in enterprises. Large databases that support critical LoB (line-of-business) operations are probably the toughest scenario since any large datasets have specific challenges starting by the nature that transferring large amounts of data requires a significant amount of time. Information is also a key asset for any company so it opens additional aspects as security, privacy, protection, ….

If you want learn more things, there are more articles talking about this topic. Check HERE